Gemini 2.5 Flash

Fast, Efficient AI with Controllable Reasoning

5.0•18 reviews•893 followers

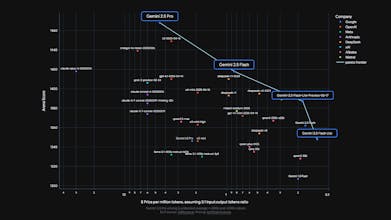

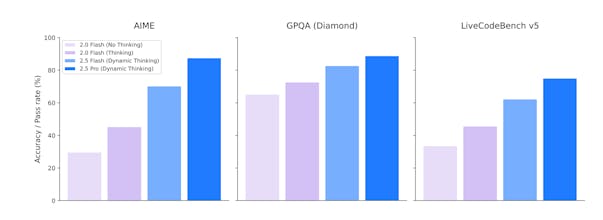

Gemini 2.5 Flash is now in preview, offering improved reasoning while prioritizing speed and cost efficiency for developers.

This is the 2nd launch from Gemini 2.5 Flash.

Gemini 2.5 Flash-Lite

Gemini 2.5 Flash-Lite is Google's new, fastest, and most cost-efficient model in the 2.5 family. It offers higher quality and lower latency than previous Lite versions while still supporting a 1M token context window and tool use. Now in preview.

Launch Team

QuizzMe

Gemini 2.5 Flash-Lite is a fantastic leap forward! The balance between speed, cost efficiency, and quality is exactly what developers need for high-volume tasks. I’ve been excited to see how it improves performance without compromising on accuracy.

Gemini 2.5 Flash is now in preview, and it strikes a great balance—offering noticeably better reasoning while keeping things fast and cost-efficient. It's a strong choice for developers looking to build smarter apps without sacrificing performance.

Super excited to see the Gemini 2.5 model family evolving, especially the 1M-token context window and improved reasoning capabilities across modalities. The advancements in code generation are particularly interesting; curious to see how it performs on real-world API workflows.

A quick question: does the Flash‑Lite edition maintain consistent latency when running on mobile or resource-constrained environments? Would love to understand its optimisation approach there.

Planning to experiment with Gemini 2.5 soon for API integration and code-heavy tasks — has anyone benchmarked it yet for code-gen performance (vs. previous Gemini or other LLMs)?

Kudos to the Google DeepMind team — stellar work on the benchmarks!