Airtrain.ai LLM Playground

Vibe-check many open-source and proprietary LLMs at once

385 followers

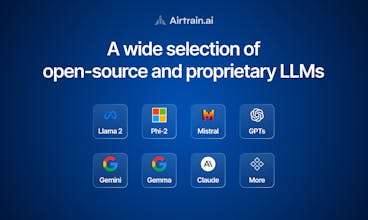

A no-code LLM playground to vibe-check and compare quality, performance, and cost side by side across a wide selection of open-source and proprietary LLMs: Claude, Gemini, Mistral AI models, OpenAI models, Llama 2, Phi-2, and more.
