OAK 4

A complete robotic vision system in a single device

86 followers

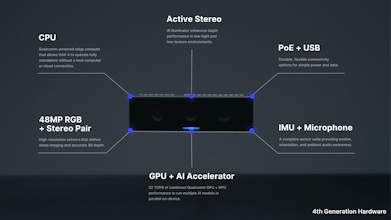

A complete AI vision system packaged in a single edge device: 52 TOPS compute, high-resolution sensing, stereo depth, and rugged industrial design. Together with Luxonis Hub for cloud control and continuous improvement, it provides an end-to-end platform for deploying and scaling computer vision in the physical world.

Hi everyone! I’m Hunter, CPO at Luxonis. Excited to share what I believe to be a truly transformative technology with you all.

For decades, most innovation has been purely digital. Our cities, factories, and infrastructure barely feel different; we just stare at screens more. And while the AI boom shows promise, this audience knows better than anyone that all it’s really produced is an overflowing pile of “AI agents” that do everything except interact with the physical world - even though nearly 40% of jobs require real movement and most of life still happens offline.

That’s why we built OAK 4: to bridge this gap by bringing AI out of the cloud and into physical environments.

Cloud-based vision is too slow and too expensive, and wiring together edge computers and sensors adds complexity and failures. OAK 4 combines powerful compute, high-quality sensors, an easy SDK, and cloud device management into one self-contained platform. OAK 4 is designed to make real-world AI actually deployable: faster prototypes, easier scaling, and no extra hardware.

It’s the eyes, ears, and brain of modern and actually intelligent robotics and automation.

We would love your feedback!

ProductAI

Let's GO Luxonis!

Can't wait to try the new and improved camera.

Our company uses their previous models in production and they really shine with on-device computing power. This new one will be even easier to set up and deploy!

@ziga_kerec This is great! Thanks for the support.

So proud to be part of this! It was a rough but exhilarating ride to get here. Seeing all this functionality come together in a single product is almost unbelievable!

I would just like to add that OAK 4 also has full out-of-the-box ROS integration!

I often see RealSense or ZED used similarly. What's the main difference here?

@jack_duncheskie ZED and RealSense are great depth sensors, but they stop there. OAK 4 gives you depth plus a full on-device vision/ML pipeline. You can pull high-res RGB, depth, and IMU data, run models like YOLO or DINOv3 directly on the device, fuse detections with depth, and stream just the results: no GPU, no host PC, no bandwidth burn. It’s powerful enough for YOLOv8-Large at ~85 FPS and light models at 500+ FPS, so you can build an entire CV stack right on the camera.
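For anyone wondering what "fuse detections with depth" means in practice, here is a rough sketch of the idea in plain NumPy. It is not the Luxonis SDK and not necessarily how OAK 4 does it on-device: the function name `detection_distance_m`, the `shrink` parameter, the millimeter depth units, and the synthetic depth map are all assumptions made up for illustration. The idea is simply to take each bounding box, look at the aligned depth pixels inside it, and report one distance per detection instead of streaming whole frames.

```python
import numpy as np


def detection_distance_m(depth_mm, box, shrink=0.25):
    """Estimate the distance (in meters) to a detected object.

    depth_mm: 2D array of depth values in millimeters, where 0 means "no data"
              (an assumed convention for this sketch).
    box:      (x_min, y_min, x_max, y_max) in pixel coordinates, assumed to be
              aligned with the depth map.
    shrink:   fraction trimmed from each side of the box so that background
              pixels near the box edges are ignored.
    """
    x0, y0, x1, y1 = box
    dx = int((x1 - x0) * shrink)
    dy = int((y1 - y0) * shrink)
    roi = depth_mm[y0 + dy : y1 - dy, x0 + dx : x1 - dx]
    valid = roi[roi > 0]  # drop holes in the depth map
    if valid.size == 0:
        return None  # no usable depth inside this box
    # The median is robust to a few stray background or noisy pixels.
    return float(np.median(valid)) / 1000.0


# Synthetic scene: a wall at 3 m with an object at 1.5 m and a small depth hole.
depth = np.full((480, 640), 3000, dtype=np.uint16)
depth[200:300, 300:400] = 1500
depth[240:250, 340:350] = 0

# Hypothetical detector output: one labeled bounding box.
detections = [{"label": "box", "bbox": (300, 200, 400, 300)}]

# The compact per-object result is what would be streamed, not the frames.
results = [
    {"label": d["label"], "distance_m": detection_distance_m(depth, d["bbox"])}
    for d in detections
]
print(results)
```

The output per frame is a short list of labels and distances, which is why streaming results rather than images keeps bandwidth low.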

This is a much needed switch up from constant software releases. I’m new to computer vision, what kind of expertise is needed to get started with this?

@marin_bomgaars The best part of OAK 4 + Hub is that you don’t need to be a CV expert to start. You can run examples right from our App Store and see depth/detections in minutes. Simple use cases (counting, basic inspection) are very beginner-friendly with tools like Roboflow, while advanced autonomy does require more expertise.

Our Python SDK is designed to be super easy. Lowering the barrier to real-world vision is a core mission for us.

I noticed the switch to a Qualcomm-based architecture. Where does computing actually happen now (CPU/GPU/NPU/EVA), and how does the stereo depth performance compare to previous generations?

@anthony_bonadonna Hey, nice question! With OAK 4 we moved to a hybrid compute setup. The CPU runs the apps and ROS, the GPU does image work, the EVA runs very lightweight depth estimation, and the NPU handles all the AI plus neural stereo depth. That neural stereo piece is what really boosts depth vs older OAKs: sharper edges, fewer holes, and room for even more advanced depth models going forward. 52 TOPS gives the device plenty of headroom to keep improving its depth capabilities.