LocalLiveAvatar

Instant Live Avatars with Lip-Sync on Everyday Hardware

11 followers

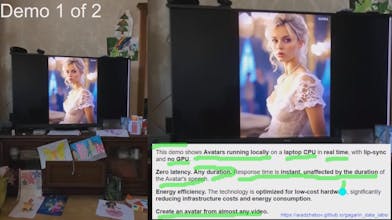

Unlike other solutions, LocalLiveAvatar runs on everyday hardware, with no high-end GPU or cloud service required. The avatar responds instantly, whether it speaks for 2 seconds or 20 minutes. Because nothing is sent to the cloud, your data stays secure. An avatar can be generated from almost any video or photo. Once created, it can voice any text or audio in any language with accurate lip synchronization.

AbleMouse

I'm happy to provide a detailed demo and answer all your questions.

For interested parties, I can arrange a demonstration as follows: we connect via a conference call, you send me your audio file through my Telegram bot, and we immediately see the live avatar speak it.