LlamaChat

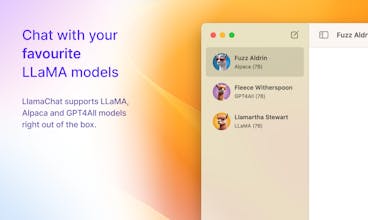

Chat with your favourite LLaMA models right on your Mac

39 followers

LlamaChat allows you to chat with LLaMA, Alpaca and GPT4All models, all running locally right on your Mac. Support for Vicuna and Koala is coming soon. NOTE: LlamaChat does not include model files; you must obtain them separately and adhere to each source's terms and conditions.