Launched this week

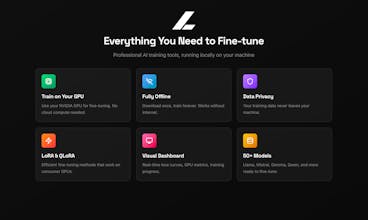

Langtrain

Postman for fine-tuning LLMs

14 followers

Train AI models locally and use them offline forever. Langtrain Studio fine-tunes Llama, Mistral, and Gemma on your own GPU, with no cloud uploads and no per-token fees. Download once, train offline. Real-time dashboard, LoRA/QLoRA support, one-click export. Your data stays on your machine. Own your AI infrastructure.

Hey Product Hunt! 👋

I'm Pritesh, the founder of Langtrain.

The problem that frustrated me: Every time I wanted to fine-tune an LLM, I ended up spending more time on boilerplate code, CUDA errors, and memory management than on the actual AI work. It felt like I needed a PhD just to customise a model.

The "aha" moment: I realised developers don't need another library — they need an interface. Just like Postman revolutionised API testing by making it visual and intuitive, I wanted to do the same for AI fine-tuning.

What Langtrain does:

Pick a model from 80+ options (Llama, Mistral, Qwen, DeepSeek...)

Upload your dataset (we handle the formatting)

Click "Train" — that's it. No Python. No GPU nightmares.

Deploy your custom model to production in seconds

Why I'm excited: Fine-tuning is the real unlock for AI. It's how you go from "generic chatbot" to "AI that actually knows your domain." And it should be accessible to everyone, not just ML engineers.

I'd love to hear: What would you fine-tune a model for?

Drop your use case below — I'll personally reply to every comment! 🧡