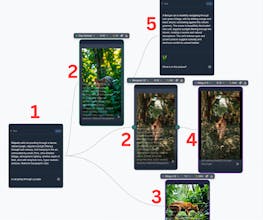

Your AI art copilot. Drag and drop “nodes” (text, image, video) onto a whiteboard and chain AI text, image, and video models together. Pipe text or image output to other nodes to refine prompts, turn them into videos, or explain images. Easily compare model

AutoPosts AI

@edrick_dch Really like the idea of Blooming! It sounds like a great solution to the chaos of managing multiple AI tools. I agree, real-time collaboration would be a great addition, and the audio model could definitely be cool for expanding creative possibilities. Keep it up, excited to see how it evolves!

@edrick_dch Local model support? Something like a frontend extension for ComfyUI. That would actually be useful to a lot of devs.

Shit Drop Game

I tried using Blooming, and I think it will be very useful for creative directors and filmmakers.

One quick recommendation: it would be much nicer if the generations ran in parallel.

The video generation was blocking the whole pipeline at the beginning, and I thought something was wrong for a while.

AutoPosts AI

@jeahong Thank you for the feedback and for trying it out! You're right, the video generation currently blocks - working on a fix. Also realizing the UI could communicate better to the user how long it will take to generate a video (a few minutes).
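For anyone curious what "generations in parallel" could look like, here is a minimal sketch, not Blooming's actual implementation. It assumes each node's model call can be awaited independently; `generate`, `run_nodes`, and the sleep durations are hypothetical stand-ins for real model requests.

```python
import asyncio

# Hypothetical stand-in for a remote model call; the name, arguments,
# and durations are illustrative assumptions, not a real API.
async def generate(kind: str, prompt: str, seconds: float) -> str:
    await asyncio.sleep(seconds)  # simulates waiting on the model
    return f"{kind} result for {prompt!r}"

async def run_nodes(prompt: str) -> dict[str, str]:
    # Start every independent node at once so the slow video job
    # does not hold up the faster text and image jobs.
    jobs = {
        "text": asyncio.create_task(generate("text", prompt, 0.01)),
        "image": asyncio.create_task(generate("image", prompt, 0.02)),
        "video": asyncio.create_task(generate("video", prompt, 0.05)),
    }
    results = await asyncio.gather(*jobs.values())
    return dict(zip(jobs.keys(), results))

if __name__ == "__main__":
    for kind, result in asyncio.run(run_nodes("a blooming flower")).items():
        print(kind, "->", result)
```

This only helps for nodes that do not depend on each other; a node that takes another node's output as input still has to wait for it.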

Does this have any link with Sand.ai (Magi video gen's cloud infra)? The canvas-mode image-to-video gen is eerily similar. Nonetheless, that was a great concept, and so is this. BYOK + clean frontends are such a convenience.

As a generative artist working with text, images, and video, this feels like a visual command center for creativity. Dragging nodes, linking models, and seeing it all come together makes the whole process smooth and fun.

I don’t know. The interface is not intuitive.

I guess this is not for me?