FastVLM

Lightweight open-source vision models for Apple devices

5 followers

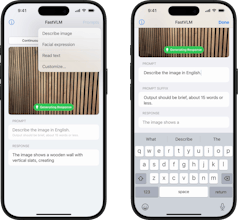

FastVLM is Apple's open-source vision encoder for efficient on-device VLMs. It enables faster processing of high-resolution images with less compute.

Flowtica Scribe

Hi everyone!

Apple has released FastVLM, an open-source vision model designed specifically for efficiency. It's notably lightweight and fast, which makes it suitable for running directly on edge devices, and it is optimized to run natively on Apple Silicon.

This focus on efficient, on-device processing marks a significant step away from the heavy reliance on cloud compute that is typical of many powerful vision language models. It pushes forward the idea of smartphones and other devices becoming more autonomous AI platforms.

By combining this efficient vision encoding with the optimization potential of Apple's Neural Engine, FastVLM could form a core part of future system-level AI features in iOS and other Apple platforms.

It’s a glimpse into how powerful AI can run locally.