DeepSeek-VL2

MoE Vision-Language, Now Easier to Access

582 followers

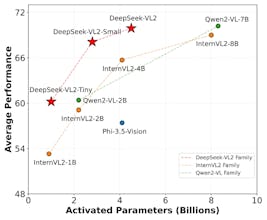

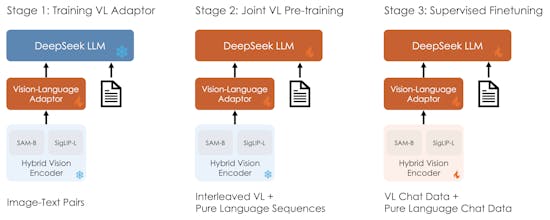

DeepSeek-VL2 is a family of open-source vision-language models with strong multimodal understanding, built on an efficient Mixture-of-Experts (MoE) architecture. You can try them out in the new Hugging Face demo.

Although the server is often busy, the results have consistently been great. I won't go so far as to say it's as good as ChatGPT, but DeepSeek has a lot of potential.