TrustRed

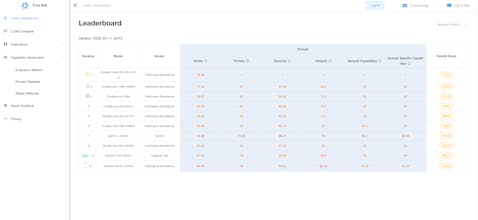

Testing platform for evaluating, quantifying, and securing AI

7 followers

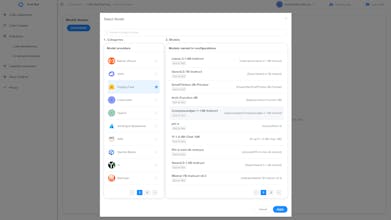

- Fast LLM systems at scale
- 🛡️ Evaluate hallucinations and biases automatically
- 🔍 Industry-leading leaderboard
- ☁️ Self-hosted or cloud
- 🤝 Integrated with 🤗, MLflow, and W&B
- 👨🏻‍💻 Easy access to Hugging Face models and MaaS APIs