Launching today

BuildAIPolicy

Region & Industry aware AI policies generated in minutes

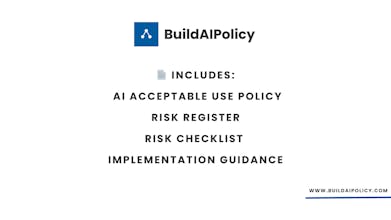

Most AI policy tools are built for enterprises or require consultants. BuildAIPolicy is different. It helps small and mid-sized organizations generate clear, ready-to-adopt AI policies and risk documentation based on their region, industry, and real AI use. No subscriptions, no enterprise tooling, and no legal complexity — just a practical starting point for responsible AI adoption.

Hi Product Hunt!

I built BuildAIPolicy after seeing many small and mid-sized teams already using AI at work, but without clear internal rules.

Most existing options felt wrong for them — consultants are expensive, enterprise tools are heavy, and free templates are too generic.

BuildAIPolicy is a simple, practical starting point. It generates ready-to-adopt AI policies and risk documents based on your region and how you actually use AI.

It’s not legal advice — just something clear and usable that teams can adopt quickly.

I’d really appreciate your feedback:

What feels unclear?

What’s missing?

Would this help your team?

As a launch thank-you, I’ve added a 20% Product Hunt discount (code PH20OFF) for a few days.

Thanks for checking it out!

Happy to answer questions about how this works for small teams.

If you’re in the US, UK, AU, or EU, I’m curious how you’re handling AI governance today.

Subscriptions vs one-off: happy to explain why we chose one-off pricing.

Region-aware (AU / EU / US), tailored to your industry and AI use case

A lot of teams talk about “Responsible AI,” but struggle to translate that into day-to-day decisions.

For us, AI governance isn’t about ethics statements — it’s about clear ownership, acceptable use, and risk awareness inside the organization.

This tool focuses on giving teams something practical they can actually adopt, rather than high-level principles that sit in a drawer.

Curious how others are approaching Responsible AI today — are you seeing more progress from principles, or from concrete internal guardrails?