Launching today

resillm

Production-ready resilience for LLM applications

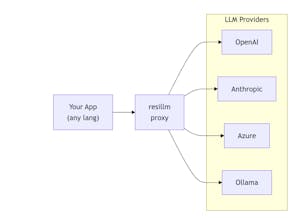

resillm is a resilience proxy for LLM applications. It adds automatic retries, provider fallbacks, circuit breakers, budget controls, and Prometheus metrics to any LLM app, and it works with OpenAI, Anthropic, Azure, and Ollama. No code changes are required.
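To make the retry, fallback, and circuit-breaker ideas concrete, here is a minimal Python sketch of how such a layer could behave. It is not resillm's implementation or API: `CircuitBreaker`, `call_with_resilience`, and every parameter name are hypothetical and chosen only for illustration, and each provider is assumed to be a plain function that takes a prompt and either returns text or raises.

```python
import time
from typing import Callable, Dict, Optional, Sequence, Tuple

# Hypothetical illustration only; none of these names come from resillm.


class CircuitBreaker:
    """Opens after `threshold` consecutive failures, then blocks calls for `cooldown` seconds."""

    def __init__(self, threshold: int = 3, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        # Once the cooldown has elapsed, let a trial request through (half-open).
        return self.clock() - self.opened_at >= self.cooldown

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.threshold:
            self.opened_at = self.clock()


def call_with_resilience(
    providers: Sequence[Tuple[str, Callable[[str], str]]],
    prompt: str,
    breakers: Dict[str, CircuitBreaker],
    max_retries: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[str, str]:
    """Try providers in order; retry each with exponential backoff, skipping open circuits."""
    last_error: Optional[Exception] = None
    for name, call in providers:
        breaker = breakers.setdefault(name, CircuitBreaker())
        for attempt in range(max_retries + 1):
            if not breaker.allow():
                break  # circuit is open: fall back to the next provider
            try:
                result = call(prompt)
            except Exception as exc:
                last_error = exc
                breaker.record_failure()
                # Back off only if another attempt on this provider will actually run.
                if attempt < max_retries and breaker.allow():
                    sleep(base_delay * 2 ** attempt)
            else:
                breaker.record_success()
                return name, result
    raise RuntimeError("all providers failed or have open circuits") from last_error
```

A proxy applies this kind of logic outside the application process, which is how the behaviour can be added without touching application code; the in-process version above is only meant to show the control flow.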

Free
