Launching today

ModelRiver

One AI API for production - streaming, failover, logs

57 followers

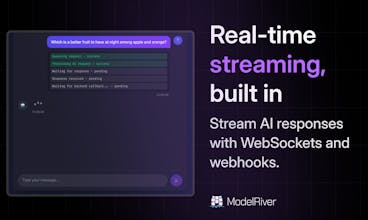

Unified AI API gateway for multiple LLM providers with streaming, custom configs, built-in packages, routing, rate limits, failover, and analytics.

Hi Product Hunt,

We’re Akarsh and Vishal, the co-founders of ModelRiver. Thanks for checking it out.

Why we built ModelRiver

We’ve both spent a lot of time building AI-powered products, and we kept running into the same problems. Every AI provider came with a different SDK and set of quirks. Streaming behaved differently across models. Switching providers meant rewriting code. And when a provider had an outage, the application went down with it. Debugging was also painful because logs and usage were spread across multiple dashboards.

We realized we were spending more time maintaining AI infrastructure than building the actual product.

What we wanted to solve

We set out to build a single, OpenAI-compatible API that works across providers, without locking teams into one vendor. As we spoke with more developers, it became clear that abstraction alone wasn’t enough. Production systems need:

- consistent streaming

- automatic failover and retries

- unified logs and usage

- the ability to bring your own API keys

ModelRiver is our attempt to make AI infrastructure reliable, observable, and easy to reason about.
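To make "automatic failover and retries" concrete, here is a minimal sketch of the general pattern, not ModelRiver's actual implementation. It assumes each provider can be wrapped as a plain function that takes a prompt and returns text; `complete_with_failover`, `flaky_primary`, and `healthy_backup` are hypothetical names used only for illustration.

```python
import time
from typing import Callable, List, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every provider has exhausted its retries."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, reply) from the first success."""
    errors: List[str] = []
    for name, call in providers:
        for attempt in range(1, retries_per_provider + 1):
            try:
                return name, call(prompt)
            except Exception as exc:  # a real client would catch narrower error types
                errors.append(f"{name} attempt {attempt}: {exc}")
                # Wait before retrying the same provider, but not before failing over.
                if backoff_seconds and attempt < retries_per_provider:
                    time.sleep(backoff_seconds * attempt)
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical fakes standing in for real provider SDK calls.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def healthy_backup(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    used, reply = complete_with_failover(
        "hi", [("primary", flaky_primary), ("backup", healthy_backup)]
    )
    print(used, reply)
```

The point of a gateway is that this loop lives in one place, so application code makes a single call and does not need per-provider error handling.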

How the product evolved

Early versions focused mainly on multi-provider support. Feedback from early users pushed us toward reliability and observability. Streaming stability, failover behavior, and logs became core features rather than optional add-ons.

ModelRiver is still early, but it’s already being used in real applications, and we’re iterating quickly based on real-world feedback.

We’d love to hear your thoughts, questions, or what you’d like to see next.

Another area we spent a lot of time on is integration testing.

When teams are wiring AI into an application, much of the cost and friction comes before anything ships. You want to test request flow, streaming, retries, fallbacks, and error handling, but you don’t want to burn real credits while doing that.

In ModelRiver, you can turn on a testing mode that lets you exercise the full integration without spending real credits. This makes it possible to:

- validate request and response handling

- test streaming and partial responses

- simulate retries and failover paths

- verify logging and usage reporting

- run CI or staging tests safely

The goal is to let teams treat AI integrations like any other dependency. You should be able to test them thoroughly before production, without worrying about cost or side effects.
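As a rough illustration of what this kind of test looks like from the application side, here is a small sketch that checks streaming and partial-response handling against a local fake. It does not use ModelRiver's testing mode or API; `FakeStreamingClient` and `collect_stream` are hypothetical names, and the fake simply replays a canned reply in chunks with no network calls.

```python
from typing import Iterator, List, Optional, Tuple


class FakeStreamingClient:
    """Hypothetical stand-in for a streaming client; makes no network calls."""

    def __init__(
        self,
        canned_reply: str,
        chunk_size: int = 4,
        fail_after: Optional[int] = None,
    ) -> None:
        self.canned_reply = canned_reply
        self.chunk_size = chunk_size
        self.fail_after = fail_after  # number of chunks to emit before failing
        self.calls: List[str] = []

    def stream(self, prompt: str) -> Iterator[str]:
        """Yield the canned reply in fixed-size chunks, optionally failing mid-stream."""
        self.calls.append(prompt)
        starts = range(0, len(self.canned_reply), self.chunk_size)
        for index, start in enumerate(starts):
            if self.fail_after is not None and index >= self.fail_after:
                raise ConnectionError("simulated mid-stream disconnect")
            yield self.canned_reply[start:start + self.chunk_size]


def collect_stream(client: FakeStreamingClient, prompt: str) -> Tuple[str, bool]:
    """Application-side handler: return (text_received, completed_cleanly)."""
    parts: List[str] = []
    try:
        for chunk in client.stream(prompt):
            parts.append(chunk)
    except ConnectionError:
        # Keep whatever arrived so the caller can decide to retry or show a partial reply.
        return "".join(parts), False
    return "".join(parts), True
```

A CI test can then assert on both the happy path and the interrupted path, for example by constructing the fake with `fail_after=2` and checking that the handler reports an incomplete stream.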

If you’re doing integration testing with LLMs today, I’d be curious to hear what’s been painful or missing.