Blackman AI

Reduce tokens. Improve responses. Route smarter.

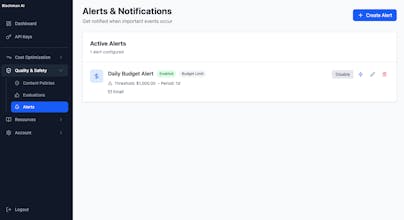

AI performance shouldn’t be a mystery, and it shouldn’t be expensive. Blackman AI gives you real-time insight into your LLM usage and actively improves it: we optimize prompts, route requests intelligently across hundreds of models, block malicious inputs, and boost response quality while cutting unnecessary cost. Point your tool’s LLM calls at Blackman AI and you’re good to go.