Toonifyit

Cut AI token costs 30-60% with smarter JSON encoding

5 followers

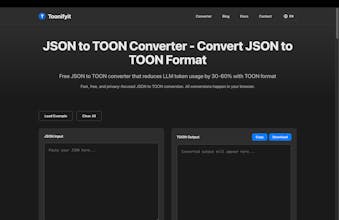

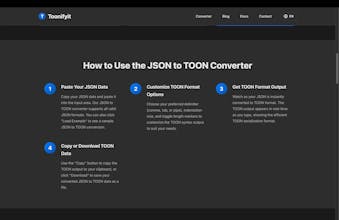

Transform how you send data to LLMs. Toonify converts JSON to TOON format, cutting token counts by 30-60% by detecting uniform arrays and encoding them as tables. It is a good fit for developers working with uniform datasets, analytics, or API responses. Where JSON repeats every key in every object, TOON declares the keys once and then lists the values as CSV-like rows, which lowers costs when calling GPT-4, Claude, or any other token-priced model. The implementation is open-source JavaScript with a simple API.
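To make the "keys once, CSV-like rows" idea concrete, here is a minimal sketch in JavaScript. It is not Toonify's actual API: the `encodeTabular` and `formatValue` helpers are hypothetical, the header syntax (`name[count]{keys}:`) is an assumption made for illustration, and the sketch only handles arrays of flat objects with primitive values.

```javascript
// Hypothetical helper (not Toonify's real API): encodes a uniform array of
// flat objects as one header line plus one CSV-like row per object.
function encodeTabular(name, rows) {
  if (!Array.isArray(rows) || rows.length === 0) {
    throw new Error("expected a non-empty array of objects");
  }
  const keys = Object.keys(rows[0]);

  // Tabular encoding only applies when every row has exactly the same keys.
  const uniform = rows.every((row) => {
    const rowKeys = Object.keys(row);
    return rowKeys.length === keys.length && keys.every((k) => k in row);
  });
  if (!uniform) {
    throw new Error("rows do not share the same keys");
  }

  // Keys are declared once in the header (assumed syntax), never per row.
  const header = `${name}[${rows.length}]{${keys.join(",")}}:`;
  const lines = rows.map(
    (row) => "  " + keys.map((k) => formatValue(row[k])).join(",")
  );
  return [header, ...lines].join("\n");
}

// Quote strings that contain a comma, double quote, or newline so that
// rows stay unambiguous; everything else is written as plain text.
function formatValue(value) {
  if (typeof value === "string" && /[",\n]/.test(value)) {
    return JSON.stringify(value);
  }
  return String(value);
}

const users = [
  { id: 1, name: "Alice", role: "admin" },
  { id: 2, name: "Bob", role: "user" },
];

console.log(encodeTabular("users", users));
// users[2]{id,name,role}:
//   1,Alice,admin
//   2,Bob,user
```

The JSON form of the same data repeats `id`, `name`, and `role` in every object, so the saving grows with the number of rows. Character counts are only a rough proxy for tokens; actual savings depend on the model's tokenizer and on how uniform the data is.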

Free
