LLM Session Manager

Monitor & collaborate on Claude Code, Cursor, Copilot

25 followers

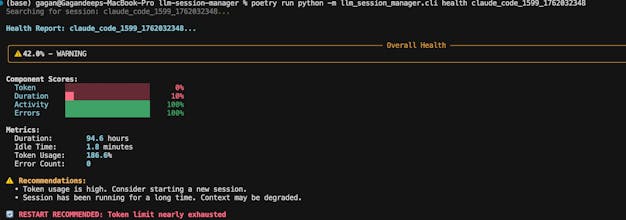

The first monitoring platform for AI coding tools. Real-time health scoring, team collaboration, AI-powered insights, and cross-session memory. Track token usage, prevent session failures, and build organizational knowledge. 100% free & open source.

Cal ID

Congrats on the launch!

How does the cross session memory feature improve collaboration between team members working on different AI coding tasks?

@sanskarix Great question! The cross-session memory feature creates a "shared brain" for your team. Here's how it works:

Problem it solves: Developer A spends 2 hours debugging a database migration issue in their Claude Code session. Developer B hits the same issue next week but has no idea A already solved it.

How memory helps:

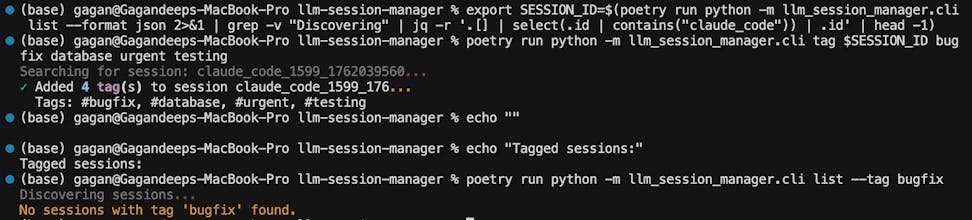

Automatic Knowledge Capture

```bash
llm-session memory-add <session-id> "Fixed Prisma migration deadlock by adding transaction isolation" "database bugfix"
```

Intelligent Search Across ALL Sessions

Returns relevant learnings from ANY team member's sessions, ranked by relevance using AI embeddings.

Context-Aware Recommendations

Analyzes your current session and suggests: "3 teammates worked on similar tasks. Here's what worked..."

Real-world example:

Team working on a React app:

Dev A: "Performance optimization - memoization fixed 200ms render"

Dev B searches: "react performance" → Finds A's solution instantly

Dev C gets auto-recommendation: "Similar component detected, consider memoization"

The magic: It uses vector embeddings, so it finds semantically similar content even with different keywords. Search "API timeout" and it finds sessions about "request hanging" or "slow responses".
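To make the "different keywords, same meaning" point concrete, here is a minimal sketch of ranking stored learnings by cosine similarity. It is not the project's actual code: the three-dimensional vectors are hand-written stand-ins for real embeddings, and `MEMORIES`, `cosine_similarity`, and `search` are hypothetical names used only for illustration.

```python
import math

# Toy 3-dimensional "embeddings" (assumption). A real system would obtain
# high-dimensional vectors from an embedding model.
MEMORIES = {
    "request hanging on checkout endpoint": [0.9, 0.1, 0.0],
    "slow responses from payments service": [0.8, 0.2, 0.1],
    "memoization fixed 200ms render": [0.1, 0.9, 0.2],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vector, memories, top_k=2):
    """Return the top_k memory texts most similar to the query vector."""
    scored = [
        (cosine_similarity(query_vector, vector), text)
        for text, vector in memories.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [text for _, text in scored[:top_k]]


# Stand-in for the embedding of the query "API timeout" (assumption).
API_TIMEOUT_QUERY = [0.85, 0.15, 0.05]
results = search(API_TIMEOUT_QUERY, MEMORIES)
print(results)
```

None of the stored texts contain the words "API" or "timeout", yet the two network-related learnings rank above the React one because their vectors point in nearly the same direction as the query.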

Super relevant for teams using AI coding tools! Quick question: do you have any way to flag when AI-generated code might have accessibility issues (missing ARIA, semantic HTML, keyboard nav)?

We've noticed AI tools often miss these details even when they write clean code.

Congrats on the launch!

@halyticai Love this question! Accessibility in AI-generated code is SO important.

Current workaround: Tag sessions with a11y issues for tracking:

Then search/filter across all sessions to find patterns.
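As a rough illustration of what "find patterns" could look like once sessions are tagged, here is a small sketch that filters notes by tag and counts which other tags show up alongside it. The `SessionNote` type, the sample notes, and the tag names other than the ones already mentioned in this thread are made up for the example; this is not the tool's data model.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class SessionNote:
    """Hypothetical record of one tagged learning from a session."""
    session_id: str
    summary: str
    tags: set = field(default_factory=set)


# Sample data (assumption), standing in for notes collected across a team.
NOTES = [
    SessionNote("s1", "Modal missing focus trap", {"a11y", "keyboard-nav"}),
    SessionNote("s2", "Icon button without aria-label", {"a11y", "aria"}),
    SessionNote("s3", "Fixed Prisma migration deadlock", {"database", "bugfix"}),
    SessionNote("s4", "Dropdown not reachable by Tab", {"a11y", "keyboard-nav"}),
]


def filter_by_tag(notes, tag):
    """Keep only the notes that carry the given tag."""
    return [note for note in notes if tag in note.tags]


def co_occurring_tags(notes, tag):
    """Count the other tags that appear on notes carrying the given tag."""
    counts = Counter()
    for note in filter_by_tag(notes, tag):
        counts.update(note.tags - {tag})
    return counts


a11y_notes = filter_by_tag(NOTES, "a11y")
patterns = co_occurring_tags(NOTES, "a11y")
print([note.session_id for note in a11y_notes])
print(patterns.most_common())
```

With this sample data, keyboard navigation comes out as the most frequent companion of the a11y tag, which is the kind of recurring problem a team would want to spot.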

What I DON'T have (yet): Automatic detection of accessibility anti-patterns in AI output.

BUT - this is a fantastic feature idea!

Imagine:

Real-time axe-core analysis of AI code suggestions

Alerts: "Missing semantic HTML"
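To give a feel for the kind of alert described above, here is a deliberately naive sketch built on Python's standard `html.parser`. It is not axe-core and covers only two checks (images without `alt`, clickable `div`/`span` elements without a `role`); the `A11yLinter` class and `lint` function are hypothetical names, and a real integration would delegate to a proper accessibility engine.

```python
from html.parser import HTMLParser


class A11yLinter(HTMLParser):
    """Collects a few obvious accessibility problems from an HTML snippet."""

    def __init__(self):
        super().__init__()
        self.findings = []  # list of (line_number, message) tuples

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this.
        attr_names = {name for name, _ in attrs}
        line, _ = self.getpos()
        if tag == "img" and "alt" not in attr_names:
            self.findings.append((line, "img is missing an alt attribute"))
        if tag in {"div", "span"} and "onclick" in attr_names and "role" not in attr_names:
            self.findings.append(
                (line, f"clickable <{tag}> without a role; consider <button>")
            )


def lint(html):
    """Run the linter over a snippet and return its findings."""
    linter = A11yLinter()
    linter.feed(html)
    linter.close()
    return linter.findings


SAMPLE = (
    '<div onclick="save()">Save</div>\n'
    '<img src="logo.png">\n'
    '<button>OK</button>\n'
    '<img src="team.png" alt="Team photo">'
)

findings = lint(SAMPLE)
for line, message in findings:
    print(f"line {line}: {message}")
```

The sample snippet triggers both checks once: the clickable `div` and the first image. The `button` and the image with `alt` text pass.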

It's fully open source - would you want to collaborate on building this? Your team clearly has the expertise!

Otherwise, I'll add it to the roadmap. This could help SO many teams.

Thanks for the insight!

@gagandeep_singh45 Thanks so much; love hearing how you’re tagging a11y issues today. We’ve been hacking on exactly that missing layer: Halytic runs real‑time axe-core checks on generated code (runtime and CI) and calls out things like missing ARIA labels, landmark structure, etc.

I’d be open to collaborating or getting on an async brainstorm. Happy to share what we’ve built and see if we can upstream an accessibility module into LLM Session Manager. Catching those anti-patterns before they hit prod would be huge for both our communities.

Ping me any time—DM here or email raymond@halytic.ai if you’re interested.