SiliconFlow

One Platform — All Your AI Inference Needs.

24 followers

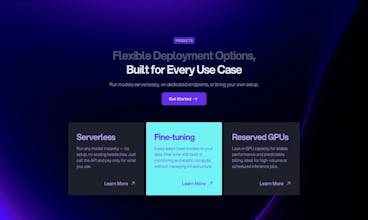

SiliconFlow provides AI infrastructure services, offering API access to a wide range of cutting-edge AI models and scalable cloud deployment solutions for developers and enterprises to build, integrate, and run AI applications efficiently.

@yangpan Is the autoscaling tuned per model type, or does it follow a global config?

@yangpan @masump It’s per-model (endpoint) first; if a model has no custom settings, it falls back to the global defaults.
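The "per-endpoint first, global defaults as fallback" lookup described above can be sketched in a few lines. This is an illustrative sketch only, not SiliconFlow's implementation. The field names (`min_replicas`, `max_replicas`, `target_concurrency`), the endpoint name `large-chat-model`, and the function `resolve_autoscaling` are all hypothetical. The sketch also assumes the fallback is all-or-nothing per endpoint. A real system might instead merge individual fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutoscalingConfig:
    """Hypothetical autoscaling knobs; field names are illustrative only."""
    min_replicas: int
    max_replicas: int
    target_concurrency: int


# Hypothetical platform-wide defaults used when an endpoint has no custom settings.
GLOBAL_DEFAULTS = AutoscalingConfig(min_replicas=1, max_replicas=8, target_concurrency=16)

# Hypothetical per-endpoint overrides, keyed by model/endpoint name.
ENDPOINT_OVERRIDES: dict[str, AutoscalingConfig] = {
    "large-chat-model": AutoscalingConfig(min_replicas=2, max_replicas=32, target_concurrency=4),
}


def resolve_autoscaling(endpoint: str) -> AutoscalingConfig:
    """Return the endpoint's own settings if present, else the global defaults."""
    return ENDPOINT_OVERRIDES.get(endpoint, GLOBAL_DEFAULTS)


if __name__ == "__main__":
    print(resolve_autoscaling("large-chat-model"))  # uses the per-endpoint override
    print(resolve_autoscaling("small-embed-model"))  # falls back to GLOBAL_DEFAULTS
```

Keeping overrides in a separate map means the global defaults can change without touching endpoints that were never customized.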

SiliconFlow is an API platform that I really like. It offers a lot of free APIs, some of which ListenHub also uses, and it supports Pan!

@leofeng Thank you Leo! Truly an honor to power great products like ListenHub on the backend 🙏

Swytchcode

Congrats on the launch! I've glanced over the platform, and it's really awesome.

Would love to connect.

@chilarai Thanks for checking it out! Glad you liked the platform — would love to connect and exchange thoughts on how you’re exploring this space.

Swytchcode

@yangpan Cool. I've already sent you a LinkedIn connection request.

This is huge for devs building AI apps. What’s been the biggest challenge in making multi-model inference seamless?

@mohammed_maaz3 Thanks! The biggest challenge has been unifying inference routing across models with very different architectures, latency profiles, and tokenization quirks — while keeping the developer API consistent and latency low.

We’ve spent a lot of time optimizing that layer so it feels truly seamless to devs.
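Here is a minimal sketch of the idea in this reply: one consistent request/response API in front of models whose internals differ. It is an assumption-laden illustration, not SiliconFlow's routing layer. `CompletionRequest`, `CompletionResponse`, `Backend`, `Router`, and the two stand-in backends are hypothetical names. The fake backends differ only in how they count tokens, which mimics the "tokenization quirks" mentioned above. Latency optimization is out of scope here.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class CompletionRequest:
    """Uniform request shape that callers use for every model (hypothetical)."""
    model: str
    prompt: str
    max_tokens: int = 16


@dataclass
class CompletionResponse:
    """Uniform response shape, regardless of which backend served it."""
    model: str
    text: str
    prompt_tokens: int


class Backend(Protocol):
    """Anything that can serve one model; each backend hides its own quirks."""
    def count_tokens(self, text: str) -> int: ...
    def generate(self, prompt: str, max_tokens: int) -> str: ...


class WhitespaceTokenBackend:
    """Stand-in for a model whose tokenizer splits on whitespace."""
    def count_tokens(self, text: str) -> int:
        return len(text.split())

    def generate(self, prompt: str, max_tokens: int) -> str:
        return " ".join(["ok"] * max_tokens)


class CharTokenBackend:
    """Stand-in for a model whose tokenizer is character-level."""
    def count_tokens(self, text: str) -> int:
        return len(text)

    def generate(self, prompt: str, max_tokens: int) -> str:
        return "x" * max_tokens


class Router:
    """Dispatches a uniform request to the backend registered for its model."""
    def __init__(self) -> None:
        self._backends: dict[str, Backend] = {}

    def register(self, model: str, backend: Backend) -> None:
        self._backends[model] = backend

    def complete(self, req: CompletionRequest) -> CompletionResponse:
        backend = self._backends.get(req.model)
        if backend is None:
            raise KeyError(f"unknown model: {req.model}")
        text = backend.generate(req.prompt, req.max_tokens)
        # Token accounting is delegated to the backend, so each model's tokenizer
        # quirks stay behind the same response field.
        return CompletionResponse(
            model=req.model,
            text=text,
            prompt_tokens=backend.count_tokens(req.prompt),
        )
```

For example, after `router.register("model-a", WhitespaceTokenBackend())`, calling `router.complete(CompletionRequest("model-a", "hello world", max_tokens=2))` returns text `"ok ok"` with `prompt_tokens == 2`. The caller never needs to know which tokenizer produced that count.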